This is part 1 of a 4-part series!

This post is the first in a 4-part series looking at the performance issues that GitKraken developers faced. This post outlines the problems themselves, and subsequent posts in the series will focus on how the solutions to each problem were developed.

Part 1: The Problems

It may sound obvious, but in order to improve an app, you have to identify the pain points and precisely what is causing them.

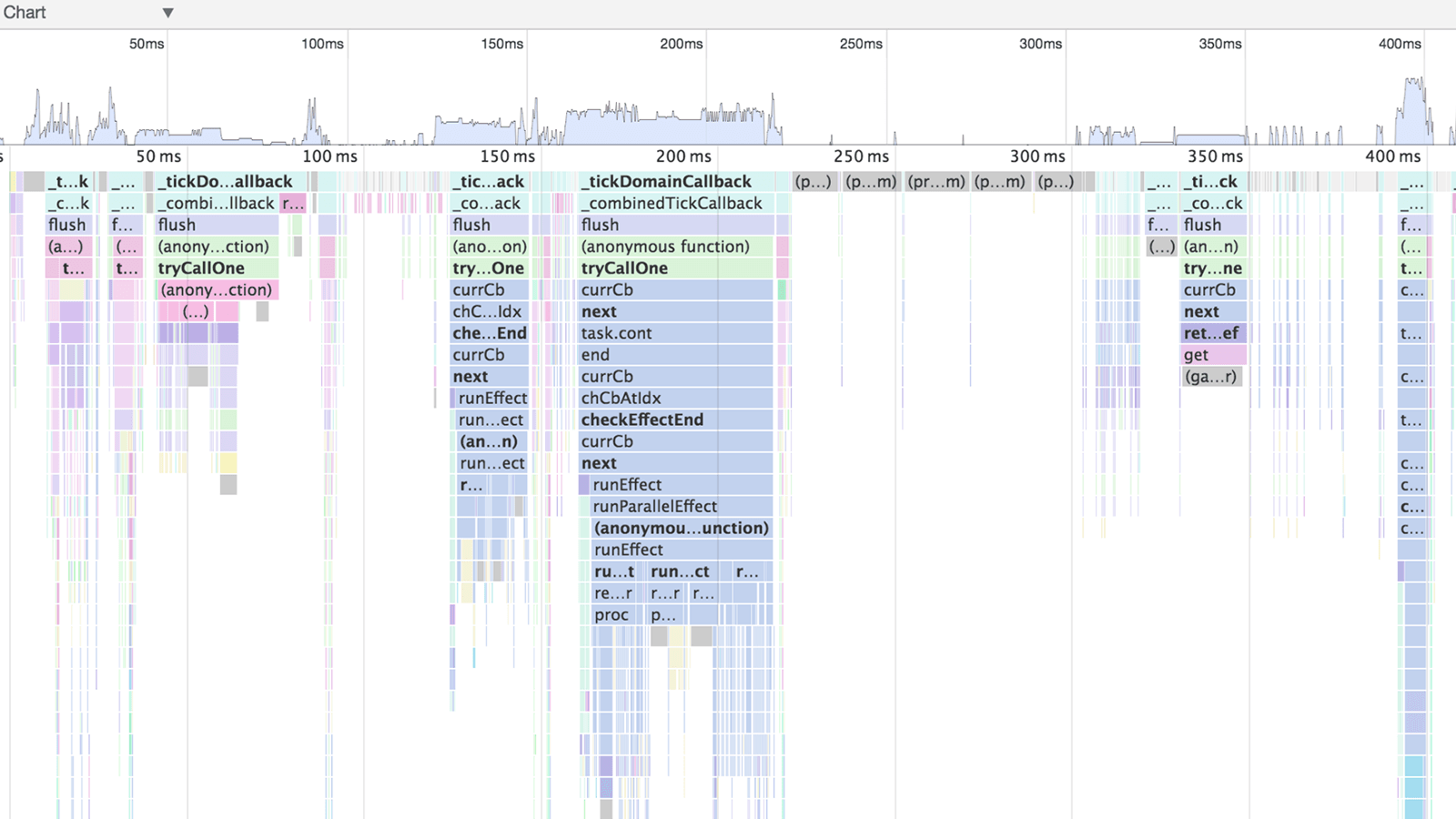

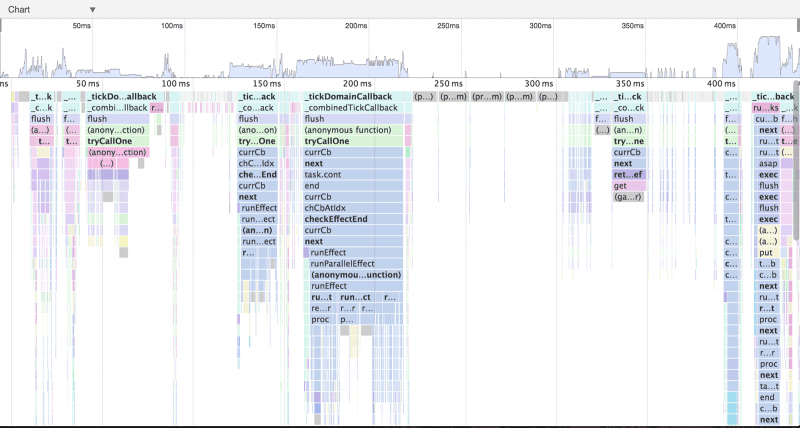

A major issue we noticed in GitKraken was that the more a repository grew, the more everything slowed down. To pin down the root of that performance degradation, we used tools such as the Chrome dev tools in Electron, which include a profiler that’s handy for showing where you’re spending most of your time.

A profile of an action in GitKraken

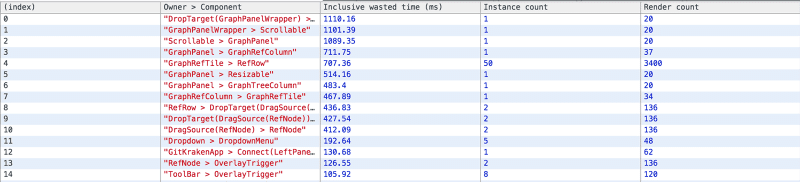

After some investigation, it was clear that the app was spending an unwelcome amount of time tied up in React processes, frequently rendering and updating. Thankfully, React has its own tools for discovering performance issues, so we moved over to those to get more granular. It turned out that most of the expended time was in the graph, and that most of it was what we in the software development industry like to call wasted time. Wasted time is probably exactly what you expect: time spent in processes that weren’t required in the first place.

In the context of React, a full render cycle consists of pulling new data, updating components, rendering, and constructing a virtual DOM. At the end, React compares the new virtual DOM with the previous one, and it may conclude that the two are identical. That’s wasted time because no actual DOM updates needed to happen, and you just performed a bunch of work for nothing. Nothing!
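To make the idea of a wasted render concrete, here is a minimal sketch that does not use React itself. The `VNode` shape, `renderRow`, and `countChanges` are hypothetical names invented for illustration; they only model the idea of rebuilding a tree and then finding nothing to change.

```typescript
// Hypothetical, minimal virtual-node shape; real React elements carry more.
type VNode = { tag: string; text: string; children: VNode[] };

type Commit = { sha: string; message: string };

// Hypothetical render function for one graph row.
function renderRow(commit: Commit): VNode {
  return {
    tag: "div",
    text: "",
    children: [
      { tag: "span", text: commit.sha.slice(0, 7), children: [] },
      { tag: "span", text: commit.message, children: [] },
    ],
  };
}

// Counts differing nodes between two trees.
// A subtree present on only one side is counted as a single change.
function countChanges(prev: VNode, next: VNode): number {
  let changes = prev.tag !== next.tag || prev.text !== next.text ? 1 : 0;
  const length = Math.max(prev.children.length, next.children.length);
  for (let i = 0; i < length; i++) {
    const a = prev.children[i];
    const b = next.children[i];
    changes += a && b ? countChanges(a, b) : 1;
  }
  return changes;
}

const commit: Commit = { sha: "0123456789abcdef", message: "Fix graph layout" };
const previousTree = renderRow(commit);
const nextTree = renderRow(commit); // full render work, identical input

// The whole tree was rebuilt, yet there is nothing to apply: wasted time.
const wasted = countChanges(previousTree, nextTree) === 0;
```

Both trees are distinct objects that took real work to build, but the comparison finds zero changes, which is the situation the profiler was flagging.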

This scenario was starting to creep up into seconds of wasted time. A couple of wasted seconds might not seem like much, but in Computerland, seconds of wasted time are comparable to watching season 5 of Lost: it might seem like there’s a point to it, and you’ve come this far so you kind of need to see it through to completion, but in reality it takes an excruciating amount of time, becomes increasingly irritating, and turns out to be a genuinely bad user experience.

Yikes.

Anyways… The point is, at this time, every action in GitKraken would cause graph renders. That’s every action: even if no refs changed (for example, if a new PR came through, or one of the timelines on the graph updated), a whole graph refresh would still be performed. The resulting frequency of repository refreshes, combined with the slowness of the graph rendering process itself, made the whole app feel slow.

Attempted Solution #1: Unmounting the graph

We tried to remedy this by unmounting the graph while something was loading, so that during the load the whole graph component was removed from React. That made the repository load faster, but the number of special cases needed to apply this method would have made the app code far more complicated and less sustainable long-term.

Attempted Solution #2: Flux implementation and Immutable.js

In our Flux implementation at the time, we had a store for each domain, and as a domain was updated, that update would cause a refresh of the graph. But, if you had a big refresh coming through, with multiple domain updates, you’d get a cascading effect of a graph refresh being calculated with every one of those domain updates. To put that into an actual use-case context, refreshing a repository would essentially result in around 8 graph rerenders, producing significant performance consequences in the app.

How so? A quick background about how Flux operates: There is a dispatch of data, and that dispatch goes from store to store, updating things as it goes. Each store—if its data changes—emits an event saying that some data has changed. React then responds to this event, grabbing the new data from the store and performing a render process.

This is all well and good, but the kicker here is that no subsequent store would update until that render process was fully resolved. So, for a single dispatch of data that updated multiple stores, this chaining effect would get costly. This was a fundamental bottleneck in our implementation of Flux.
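The cascade described above can be sketched roughly as follows. This is not GitKraken’s code or the Flux library’s API: `DomainStore`, `dispatch`, `renderGraph`, and the domain names are all hypothetical, and the sketch assumes each store notifies its listeners synchronously before the next store sees the payload.

```typescript
type Listener = () => void;
type Payload = Record<string, unknown>;

// Hypothetical store holding the data for a single domain.
class DomainStore {
  private readonly domain: string;
  private data: unknown = undefined;
  private listeners: Listener[] = [];

  constructor(domain: string) {
    this.domain = domain;
  }

  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Applies this domain's slice of the payload and, if the data changed,
  // notifies listeners synchronously before returning.
  handle(payload: Payload): void {
    if (!(this.domain in payload)) return;
    const next = payload[this.domain];
    if (next === this.data) return;
    this.data = next;
    for (const listener of this.listeners) listener();
  }
}

let renderCount = 0;
// Stand-in for the expensive graph render.
const renderGraph = (): void => {
  renderCount += 1;
};

// Hypothetical domain names, chosen only for illustration.
const stores = ["refs", "commits", "stashes", "remotes"].map(
  (domain) => new DomainStore(domain),
);
stores.forEach((store) => store.subscribe(renderGraph));

// One dispatch walks the stores in order; each changed store triggers
// a full render before the next store is updated.
function dispatch(payload: Payload): void {
  for (const store of stores) store.handle(payload);
}

dispatch({ refs: ["main"], commits: ["a1"], stashes: [], remotes: ["origin"] });
const rendersForOneRefresh = renderCount; // one render per updated store
```

With four stores touched by one dispatch, the graph renders four times, and only the last render sees the complete new state. Scale that to the roughly 8 re-renders mentioned earlier and the cost adds up quickly.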

This performance hit was compounded by the rendering process itself. When you grabbed new data from the store, the store would give you a deep copy of the data rather than the original, to protect that original from any mutations caused by naïvely written React components. We’ve since repented for our sins and now follow the one true path.

This deep copying proved to be expensive. When a component received that copy, it performed a deep comparison between the copied data and the data it already had, to ascertain whether an update was necessary.

This check saved some time, in that it worked out whether an update needed to be performed at all, and on average the deep comparison was actually faster than just doing the update. But the check was in and of itself very expensive: all the rows (each commit in the graph is a row), each made up of multiple components and subcomponents, were separately verifying that their data was unchanged. Faster, but still an expensive chain of events.
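Here is a rough sketch of why the deep copy forces a deep comparison. The `Row` shape, `getRowsFromStore`, and `deepEqual` are hypothetical, and the JSON round-trip is just one simple way to deep-copy plain data; the post doesn’t say how the real stores copied theirs.

```typescript
type Row = { sha: string; refs: string[] };

// Recursive structural equality for plain JSON-like data.
// Every nested key and element is visited, which is why it gets expensive.
function deepEqual(a: unknown, b: unknown): boolean {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object") return false;
  if (a === null || b === null) return false;
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const aRecord = a as Record<string, unknown>;
  const bRecord = b as Record<string, unknown>;
  const aKeys = Object.keys(aRecord);
  if (aKeys.length !== Object.keys(bRecord).length) return false;
  return aKeys.every(
    (key) =>
      Object.prototype.hasOwnProperty.call(bRecord, key) &&
      deepEqual(aRecord[key], bRecord[key]),
  );
}

// Data owned by a hypothetical store.
const storeRows: Row[] = [
  { sha: "a1", refs: ["main"] },
  { sha: "b2", refs: [] },
];

// The store hands out a deep copy so callers cannot mutate its data.
function getRowsFromStore(): Row[] {
  return JSON.parse(JSON.stringify(storeRows)) as Row[];
}

const held = getRowsFromStore(); // what the component already has
const fresh = getRowsFromStore(); // what it receives after a store event

// The copies are never the same object, so a cheap identity check
// always reports a change...
const sameReference = held === fresh;
// ...and only a full walk of both structures can tell nothing changed.
const sameContent = deepEqual(held, fresh);
```

Because every read produces a brand-new object graph, identity checks are useless and each row has to pay for the full structural walk.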

So, we decided to bring in a library called Immutable.js, which provides immutable arrays and objects. It let us check quickly whether part of an object had changed, because a quick memory address comparison was enough to tell. Although this helped, it was extremely unwieldy to shoehorn into our existing infrastructure without breaking ‘a lot of stuff’, and (you guessed it!) it was really slow to update objects, even when batching updates using the built-in methods. Our updates to the data actually took longer than the renders, so we had to ditch Immutable as a solution. Womp womp.
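The comparison trick that immutable data enables can be illustrated without the library. The sketch below uses plain TypeScript objects rather than the Immutable.js API, and `GraphState`, `Row`, and `updateMessage` are hypothetical names; it shows only the reference-comparison idea, not the update cost that caused trouble in practice.

```typescript
type Row = Readonly<{ sha: string; message: string }>;
type GraphState = Readonly<{ rows: readonly Row[] }>;

// Returns a new state instead of mutating the old one.
// Unchanged rows are reused, so they keep the same reference.
function updateMessage(
  state: GraphState,
  index: number,
  message: string,
): GraphState {
  const rows = state.rows.map((row, i) =>
    i === index ? { ...row, message } : row,
  );
  return { rows };
}

const before: GraphState = {
  rows: [
    { sha: "a1", message: "first" },
    { sha: "b2", message: "second" },
  ],
};
const after = updateMessage(before, 1, "second (amended)");

// A single reference check per row replaces the deep comparison.
const untouchedRowShared = before.rows[0] === after.rows[0];
const changedRowReplaced = before.rows[1] !== after.rows[1];
```

A row component only needs to compare its old and new row by reference to know whether it has to re-render, which is the benefit described above. The trade-off is that every update has to build new containers, and that update cost is the part that hurt here.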

Attempted Solution #3 (Bonus Fail): PureScript

We tried migrating lots of stuff over to PureScript. However, once we got started, we soon realized that this wasn’t going to be the right fit for our team.

So, by this point, we had established 3 solid areas that were pain points, causing performance issues that we needed to remedy:

- How we modified the state of the application with new data that came through.

- Retrieving data out of stores.

- Determining how to update components in the UI in as fast and efficient a way as possible.

These were the 3 main points, and each required significant rethinking of how we were building the app.

The next 3 parts of this blog post series will focus on each of these issues respectively, the solutions we implemented, and how we implemented them.
